Master Linux Text Processing Commands with Our Comprehensive Guide


Text processing in Linux refers to manipulating, analyzing, and managing textual data using various command-line tools and utilities available in the Linux operating system. It involves performing a wide range of tasks on text data, such as searching, filtering, sorting, formatting, and extracting information from text files or streams of text.

Imagine effortlessly sifting through mountains of log files to find that elusive error message, extracting valuable data from a cluttered dataset, or formatting text neatly for presentation. All of this and more is easily achievable with the arsenal of text processing tools at your disposal. These commands are especially useful in scenarios such as debugging or handling large amounts of data.

In this tutorial, I will walk you through the concept of text processing in Linux, introduce various commonly used text processing commands, and highlight their benefits. You will learn how to use these commands to simplify debugging and other complex tasks.

Basic Text Editing Commands

When working with Linux, you deal with many files and a lot of text, and these commands help you stay productive as a developer. They allow you to navigate, edit, and format text efficiently. Here are some commonly used text editing commands:

cat: Displaying the Content of Files

cat (short for "concatenate") is a simple but powerful command in Linux that is primarily used to display the content of text files in the terminal. It's often used for quick inspection of file contents.

Here's how to use cat:

$ cat filename


You can use the --help flag with cat to list all of its available options:

$ cat --help

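Because cat is short for "concatenate", it can also join several files into a single stream. In this minimal sketch, part1.txt and part2.txt are hypothetical files created in a temporary directory just for the demonstration:

```shell
# Work in a throwaway directory so no existing files are touched
cd "$(mktemp -d)" || exit 1

# Two small hypothetical input files
printf 'first line\n' > part1.txt
printf 'second line\n' > part2.txt

# cat prints the files one after the other; > saves the joined result
cat part1.txt part2.txt > combined.txt

# -n numbers each output line
cat -n combined.txt
```

The order of the arguments decides the order of the content in combined.txt.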

nano and vim: Basic Text Editors for Creating and Editing Text Files

nano and vim are two popular text editors in Linux. They are used for creating, viewing, and editing text files.

nano is a straightforward and user-friendly text editor. To create or edit a file, simply type:

$ nano filename.txt

After running the command above, the nano editor opens in your terminal.

Output:

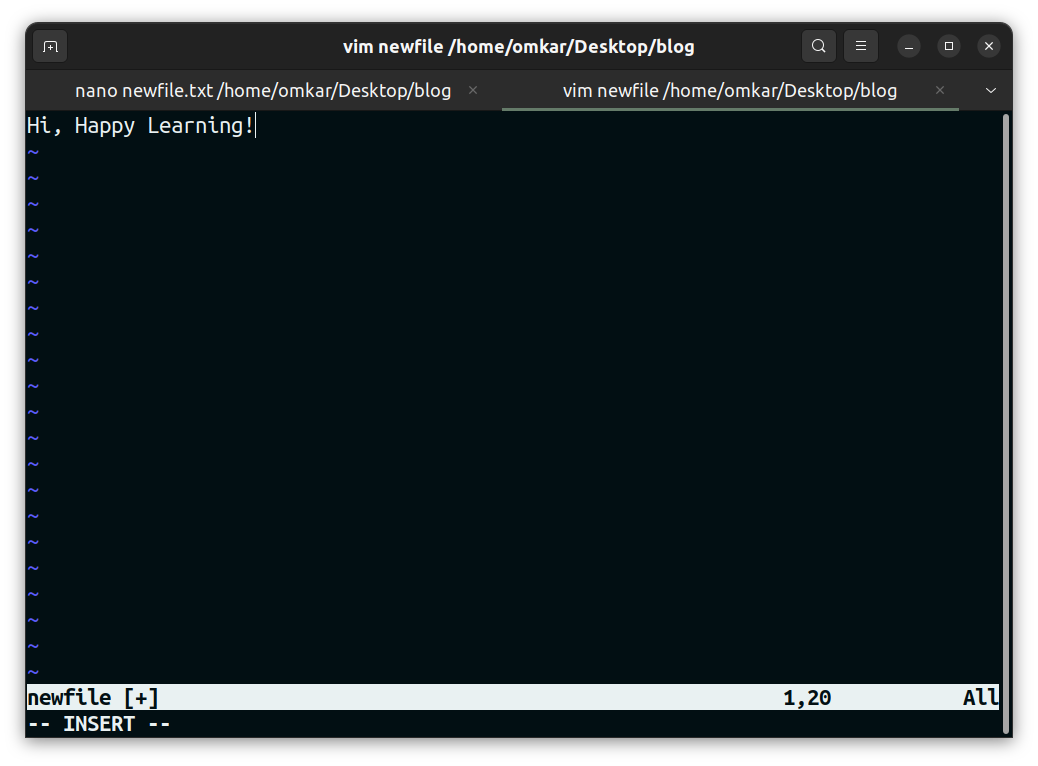

vim, by contrast, is a more advanced and powerful text editor, but it has a steeper learning curve. To create or open a file in Vim, run:

$ vim newfile

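Once the file is open, press i to enter Insert mode before you start typing. When you are done, press Esc to return to Normal mode, then type :wq and press Enter to save the file and quit.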


Inside nano or vim, you can edit the file, save your changes, and exit. In nano, the basic keyboard shortcuts are displayed at the bottom of the terminal, as you can see in the nano screenshot above. In Vim, you switch between different modes (Insert, Normal or command, and Visual) to edit and save files.

echo: Printing Text to the Terminal

The echo command displays text or variables on the terminal screen, and it is frequently used in shell scripts to give feedback to users or display information. Let's go through some examples below.

Print a simple message to the terminal:

$ echo "Hello, World"

Output:

Hello, World

Display the value of a variable:

$ myVar="This is my text."

$ echo "$myVar"

Output:

This is my text.
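Whether you quote the variable matters once its value contains runs of spaces or wildcard characters. This small sketch uses an illustrative value with repeated spaces to show the difference:

```shell
# A value containing runs of spaces (illustrative)
myVar="This   is   spaced"

# Unquoted: the shell splits the value into words, and echo rejoins them
# with single spaces, so this prints: This is spaced
echo $myVar

# Quoted: the value reaches echo unchanged, so this prints: This   is   spaced
echo "$myVar"
```

Quoting variables, as in echo "$myVar", is the safer habit in scripts.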

Pipes and Redirection in Linux

The Linux shell has two extremely useful features that let you combine commands and reroute their input and output: pipes and redirection. They are essential tools if you want to use the shell efficiently.

Piping in Linux

Linking one command's output to another command's input is known as “piping”. It lets you perform complex tasks by chaining commands together. The vertical bar (|) is the pipe operator.

The following command lists the entries in the current directory and counts them:

$ ls | wc -l

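The wc -l command receives the output of ls and counts the lines in its input. Because ls prints one name per line when its output is sent to a pipe, the result is the number of entries in the directory.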

Example output:

3

Another example:

$ echo "Hello, World" | tr 'a-z' 'A-Z'

Output:

HELLO, WORLD

In the above command, the output of echo is passed through the tr command, which converts every lowercase letter to uppercase.
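Pipes are not limited to two commands. This sketch chains three of them on some made-up sample text: tr uppercases every letter, sort orders the lines, and grep keeps only the lines that mention LINUX.

```shell
# Illustrative input: three lines of text
printf 'linux pipes\nshell basics\nlinux redirection\n' |
    tr 'a-z' 'A-Z' |   # uppercase every letter
    sort |             # sort the lines alphabetically
    grep 'LINUX'       # keep only lines containing LINUX
```

This prints LINUX PIPES followed by LINUX REDIRECTION. Each command only sees the output of the one before it, which is what makes pipelines easy to build up step by step.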

Redirection in Linux

Redirection is a way of changing a command's default input or output. This allows you to save the output of a command to a file or to read input from a file instead of the keyboard. The following operators are used for redirection purposes.

The output of a command can be redirected to a file using the > operator:

$ curl -L https://raw.githubusercontent.com/kubernetes/kubernetes/master/README.md > README.md

The above command downloads the README.md file of the Kubernetes repository and writes it to a local file named README.md, creating the file if needed or overwriting it if it already exists.

The >> operator appends a command's output to the end of an existing file, or creates the file if it doesn't exist yet.

Suppose you have a local file called numbers.txt with the following content:

$ cat numbers.txt
one
two

Using the >> operator, you can append your text at the end of this file.

$ echo "three" >> numbers.txt

Now check the contents of the file using the cat command:

$ cat numbers.txt
one
two
three

Note: If you use the > operator instead, the existing content is overwritten and the file contains only “three”.
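To see the two operators side by side, this sketch recreates numbers.txt in a temporary directory, appends with >>, and then overwrites with >:

```shell
# Use a temporary directory so a real numbers.txt is not modified
cd "$(mktemp -d)" || exit 1

printf 'one\ntwo\n' > numbers.txt   # recreate the example file
echo "three" >> numbers.txt          # append: the file now has three lines
cat numbers.txt

echo "three" > numbers.txt           # overwrite: only "three" remains
cat numbers.txt
```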

The < operator redirects a file into a command's standard input, so the command reads from the file instead of the keyboard.

Example:

$ wc -l < numbers.txt > lines.txt

The command above uses wc to count the lines read from numbers.txt and saves the resulting line count in a file named lines.txt.
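One visible effect of < is that wc no longer knows the file name, so it prints only the number. This sketch, which recreates a three-line numbers.txt in a temporary directory, compares both forms:

```shell
cd "$(mktemp -d)" || exit 1
printf 'one\ntwo\nthree\n' > numbers.txt

# With a file argument, wc prints the count followed by the file name
wc -l numbers.txt

# With <, wc reads standard input and has no file name to print
wc -l < numbers.txt > lines.txt

# Some wc implementations pad the number with leading spaces
cat lines.txt
```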

The 2> operator redirects a command's error messages (standard error) to a file of your choice. Suppose you list the Docker containers running on your machine with docker ps, but the command fails; you can redirect the error message to a file instead of the terminal.

Example:

$ docker ps 2> error.txt

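If Docker is not installed, the same idea can be tried with any command that fails. This sketch uses ls on one existing file and one file assumed not to exist, so that regular output and errors go to separate files; it also shows 2>&1, which sends both streams to the same file.

```shell
cd "$(mktemp -d)" || exit 1
touch present.txt   # this file exists; missing.txt does not

# Standard output goes to out.txt, standard error goes to error.txt
ls present.txt missing.txt > out.txt 2> error.txt ||
    echo "ls reported an error (exit status $?)"

cat out.txt     # the name of the file that exists
cat error.txt   # the complaint about missing.txt

# 2>&1 sends standard error wherever standard output points, here all.txt
ls present.txt missing.txt > all.txt 2>&1 ||
    echo "both streams were saved to all.txt"
```

The order matters in the last command: > all.txt must come before 2>&1 so that standard output already points at the file when standard error is duplicated.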

Text Processing Commands in Linux

Text processing commands in Linux are essential tools for working with text files and manipulating textual data. These commands provide a wide range of functionalities, including searching, editing, extracting, sorting, and transforming text.

Below are some of the commonly used text-processing commands:

The grep command

grep is a command-line utility for searching text files using regular expressions. It allows you to find lines that match a specific pattern or expression. Regular expressions provide a powerful way to specify complex search patterns, including character sequences, wildcards, and repetition rules.

Example:

To search a file called tools for lines containing the name of a tool, such as Github, use:

$ grep "Github" tools


Note: You can use the -i option with grep to perform a case-insensitive search.
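The effect of -i is easiest to see on a small file. Here the contents of tools are hypothetical, written to a temporary directory for the demonstration:

```shell
cd "$(mktemp -d)" || exit 1

# Hypothetical contents for the tools file
printf 'Github\ngithub actions\nGitLab\nJenkins\n' > tools

grep "Github" tools      # case-sensitive: matches only "Github"
grep -i "github" tools   # case-insensitive: also matches "github actions"
grep -c -i "git" tools   # -c prints the number of matching lines (3)
```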

Find all lines starting with the letter "G" in a file called "tools":

$ grep "^G" tools


The sed command

sed is a stream editor that enables non-interactive text manipulation. It allows you to modify, replace, or delete text patterns in files. It works by processing text line by line, applying a set of editing commands specified in a script or directly on the command line.

Consider this example, which searches for kubernetes and replaces it with k3s:

$ sed 's/kubernetes/k3s/g' filename

In the above command:

- s means that we want to substitute a word.
- kubernetes is the word we want to replace, and k3s is the word we want to substitute for it.
- The letter g indicates that the substitution is applied globally, replacing every match on a line instead of only the first one.

If you run the command above on a file containing the following line:

kubernetes kubernetes kubernetes kubernetes

You will get the following output:

k3s k3s k3s k3s
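To see what the g flag changes, this sketch pipes the same line into sed with and without it. Without a file argument, sed reads from standard input:

```shell
line="kubernetes kubernetes kubernetes kubernetes"

# Without g, only the first match on each line is replaced
# prints: k3s kubernetes kubernetes kubernetes
echo "$line" | sed 's/kubernetes/k3s/'

# With g, every match on the line is replaced
# prints: k3s k3s k3s k3s
echo "$line" | sed 's/kubernetes/k3s/g'
```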

As another example, the following command removes every line containing the word error from logs.txt:

$ sed '/error/d' logs.txt

sed prints the result to the terminal: every line that contains “error” is dropped entirely, not just the word itself, and logs.txt on disk is left unchanged.
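Because sed writes to standard output, a common pattern is to redirect the filtered result into a new file. The log lines below are hypothetical sample data:

```shell
cd "$(mktemp -d)" || exit 1

# Hypothetical log contents
printf 'service started\nerror: disk full\nrequest served\n' > logs.txt

# Lines containing "error" are left out of the output; logs.txt is not modified
sed '/error/d' logs.txt > logs-clean.txt

cat logs-clean.txt   # service started, request served
cat logs.txt         # still contains all three lines
```

To edit the file in place instead, GNU sed accepts -i, while the BSD/macOS sed requires an explicit (possibly empty) backup suffix, as in sed -i '' '/error/d' logs.txt.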

The awk command

awk is a versatile text processing tool for extracting and manipulating data. It processes text files line by line, allowing you to perform calculations, format output, and generate reports. It uses a pattern-action paradigm, where you specify patterns to match and actions to perform on matching lines.

For example, suppose you have a file called data.csv and want to extract specific fields from it with awk:

$ cat data.csv

Output:

index,ean,stock,price
1,2010743556564,669,135
2,2668829157992,476,584
3,0429683856399,875,44
4,2548029150224,77,251
5,3442300385932,742,737

To print the stock field, which is the third field:

$ awk -F , '{print $3}' data.csv

Output:

stock
669
476
875
77
742

The -F flag sets the field separator to a comma (,), so awk splits each line of the CSV file into fields at the commas. With {print $3}, awk goes through each line of the file and prints the third field, including the stock header from the first line.
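awk can print several fields at once and skip the header. In this sketch, which recreates data.csv in a temporary directory, NR (awk's built-in line counter) is used to skip the first line, and the comma between $1 and $4 in the print statement inserts a space:

```shell
cd "$(mktemp -d)" || exit 1

# Recreate data.csv from the example above
cat > data.csv <<'EOF'
index,ean,stock,price
1,2010743556564,669,135
2,2668829157992,476,584
3,0429683856399,875,44
4,2548029150224,77,251
5,3442300385932,742,737
EOF

# NR > 1 skips the header row; print the index and price fields
awk -F , 'NR > 1 { print $1, $4 }' data.csv
```

The first line of output is 1 135 and the last is 5 737.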

Calculate the sum of the third column in a file called "data.csv":

$ awk -F , '{ sum += $3 } END { print sum }' data.csv

sum += $3 adds the value of the third field on each line to a running total (the header text stock counts as 0), and the END block { print sum } prints the total to your terminal after all lines have been read.

Output:

2839
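The pattern-action form mentioned earlier puts a condition before the braces, and the action runs only on lines that match it. This sketch (again recreating data.csv) uses an illustrative threshold of 500 to list products that are low on stock:

```shell
cd "$(mktemp -d)" || exit 1

cat > data.csv <<'EOF'
index,ean,stock,price
1,2010743556564,669,135
2,2668829157992,476,584
3,0429683856399,875,44
4,2548029150224,77,251
5,3442300385932,742,737
EOF

# Pattern: data rows (NR > 1) whose stock field is below 500
# Action: print the EAN and the stock value
awk -F , 'NR > 1 && $3 < 500 { print $2, $3 }' data.csv
```

With this data, only the rows with stock 476 and 77 match.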

The sort command

sort is used for sorting lines of text in a file. You can sort alphabetically, numerically, or based on custom criteria. It can handle various data types, including numbers, strings, and alphanumeric combinations.

Sort the file tools.txt alphabetically with the sort command below:

$ sort tools.txt


Sort the lines of a file called "numbers.txt" numerically:


Run the following command:

$ sort -n numbers.txt

Without -n, sort compares lines character by character as text, so a line such as 10 is placed before 9. With -n, lines are compared by their numeric value.
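The difference is easy to reproduce with a few illustrative numbers piped into sort:

```shell
# Text order compares characters: 10, 100, 9
printf '10\n9\n100\n' | sort

# Numeric order compares values: 9, 10, 100
printf '10\n9\n100\n' | sort -n

# -r reverses the order: 100, 10, 9
printf '10\n9\n100\n' | sort -rn
```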

The tac command

The tac command in Linux is used to reverse the contents of a text file, displaying the lines in reverse order, from the last line to the first. "tac" is essentially "cat" spelled backward, emphasizing its purpose of reversing text.

Here is how you can use the tac command. Let's say you want to run tac on file.txt, which contains the following lines:

$ cat file.txt
line 1
line 2
line 3
line 4

$ tac file.txt

Output:

line 4
line 3
line 2
line 1
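A practical use of tac is reading a log newest-first. In this sketch, app.log is a hypothetical file with the oldest entry at the top; tac reverses it, and grep -m 1 stops at the first match, which is the most recent error:

```shell
cd "$(mktemp -d)" || exit 1

# Hypothetical log file, oldest entry first
printf 'error: first failure\ninfo: recovered\nerror: second failure\n' > app.log

# Newest line first, then stop at the first matching line
tac app.log | grep -m 1 'error'
```

This prints error: second failure. tac is part of GNU coreutils, so it may be missing on macOS, where tail -r serves the same purpose.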

Conclusion

This article described how to use various text-processing commands to increase your productivity when working with a Unix-like system, with examples and use cases for each.

There is always something new to learn about Linux.