Upgrading Your EKS Cluster from 1.22 to 1.23: A step-by-step guide


This article was originally published on Gagher Theophilus's blog.

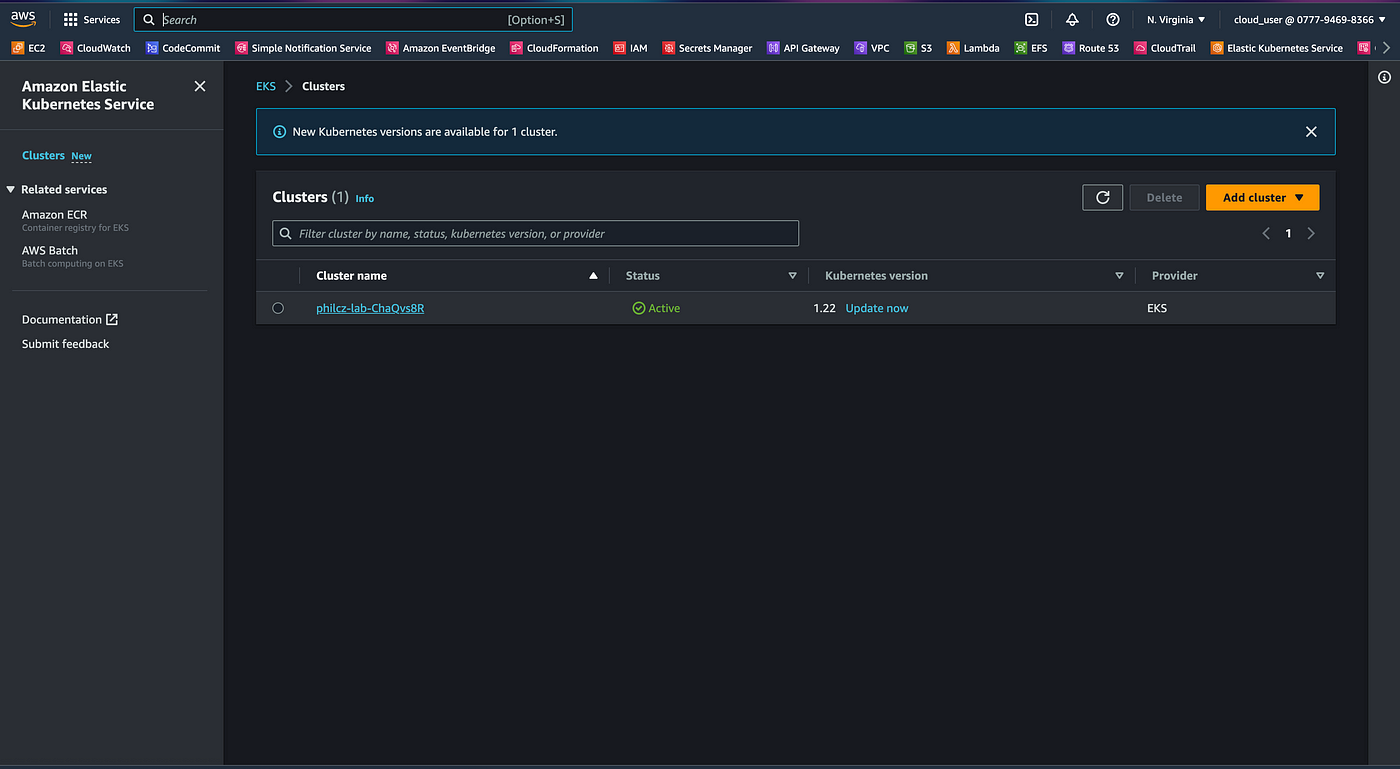

Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service that lets you run Kubernetes workloads on AWS without the operational overhead of managing your own control plane. It provides a highly available and scalable platform for running containerized applications in production. Kubernetes evolves quickly, with new features and bug fixes in every release, and EKS keeps pace by regularly adding support for new Kubernetes versions. In this article, we will walk through upgrading an EKS cluster from version 1.22 to version 1.23, using the EKS Rolling Update tool to upgrade the worker groups.

Why Upgrade Your EKS Cluster?

Upgrading your EKS cluster to the latest version of Kubernetes brings a host of benefits, including new features, bug fixes, and security updates. New Kubernetes versions also address performance and scalability issues, improving the overall stability and reliability of your cluster. Staying current with the latest Kubernetes version ensures that you can take advantage of the latest innovations in container orchestration technology.


Step 1: Review the Release Notes

Before you begin upgrading your EKS cluster, review the release notes for the new Kubernetes version, both the upstream Kubernetes notes and the EKS-specific notes. They describe the changes in the new version, including any breaking changes or deprecations that may affect your workloads. For 1.23 on EKS, for example, the in-tree to CSI migration for Amazon EBS volumes is enabled, so clusters that use EBS-backed persistent volumes need the Amazon EBS CSI driver installed before the upgrade.

Step 2: Back Up Your EKS Cluster

Upgrading your EKS cluster is a critical operation that can potentially disrupt your production workloads. Therefore, it is essential to back up your EKS cluster before starting the upgrade process. You can use several tools to back up your EKS cluster, including Velero, which is an open-source tool for backing up Kubernetes clusters.
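As a rough sketch of what a pre-upgrade backup with Velero might look like, the script below builds a timestamped backup name and creates a cluster-wide backup. It assumes the velero CLI is installed and that Velero is already deployed in the cluster with a working backup storage location, which this article does not cover. The function names and the `pre-upgrade` name prefix are illustrative choices, not part of Velero or of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: take a cluster-wide Velero backup before upgrading.
# Assumption: velero CLI installed, Velero server already running in the
# cluster with a configured backup storage location.

# Build a timestamped backup name, e.g. pre-upgrade-1-22-20230420-130327.
# Dots in the version are replaced with dashes to keep the name simple.
# The second argument (timestamp) is optional and defaults to "now".
backup_name() {
  local version stamp
  version="$(printf '%s' "$1" | tr . -)"
  stamp="${2:-$(date +%Y%m%d-%H%M%S)}"
  printf 'pre-upgrade-%s-%s\n' "$version" "$stamp"
}

# Create the backup, wait for it to finish, then print its details.
backup_cluster() {
  local name
  name="$(backup_name "$1")"
  velero backup create "$name" --wait
  velero backup describe "$name"
}

# Example (uncomment to run against your current kubectl context):
# backup_cluster 1.22
```

Calling `backup_cluster 1.22` blocks until Velero reports the backup as finished, so you can confirm it completed before touching the control plane.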

Step 3: Upgrade Your EKS Cluster Control Plane

The EKS cluster control plane runs the Kubernetes API server and the other core control plane components. Upgrading the control plane is the first step in upgrading your EKS cluster. You can upgrade it using the EKS console, the AWS CLI, the eksctl command-line tool, or, if you deploy your EKS cluster with an Infrastructure as Code (IaC) tool, a tool like Terraform.

To upgrade the control plane using the EKS console, follow these steps:

- Open the EKS console and navigate to your cluster.

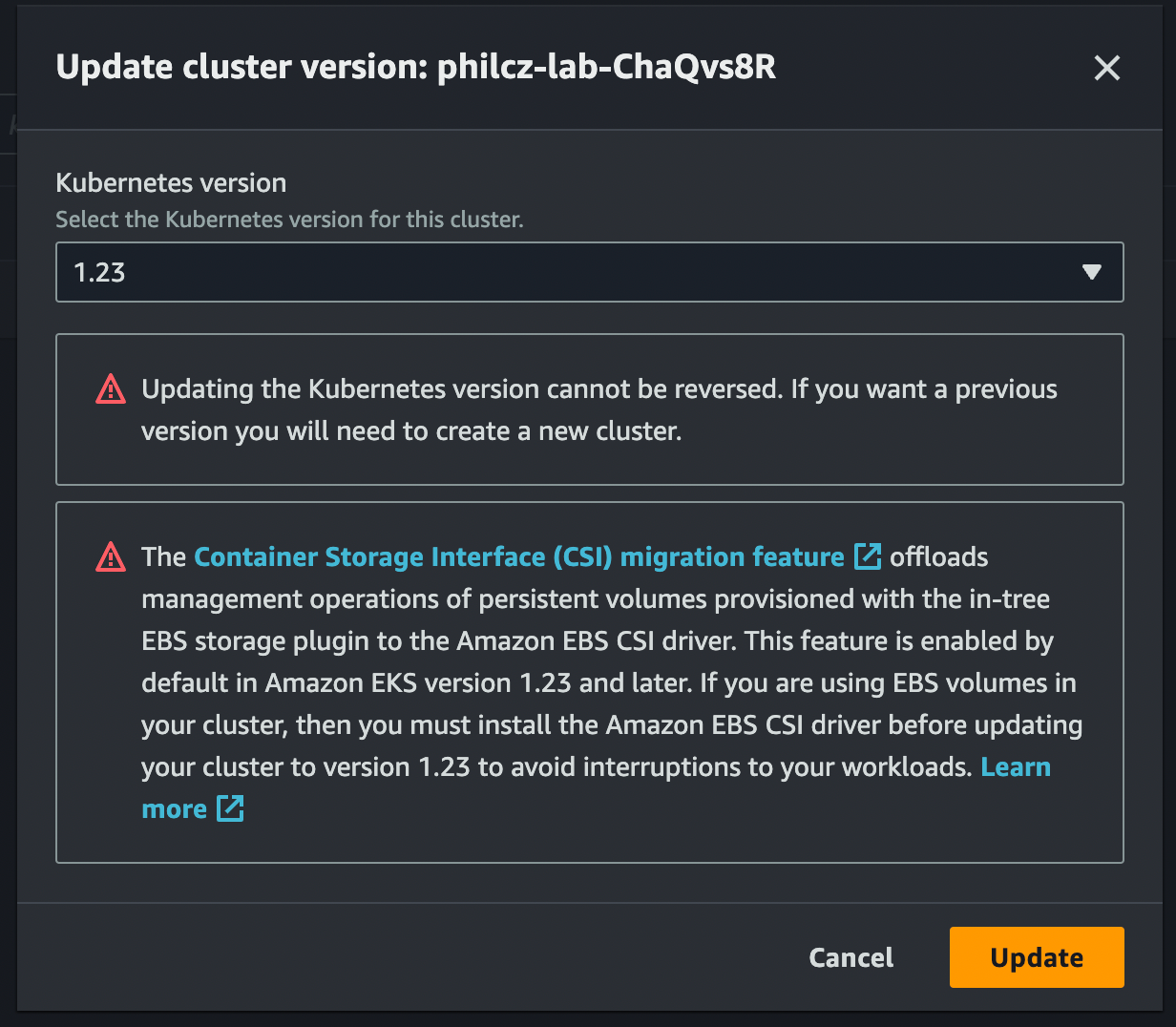

- Click the “Update” button in the upper right corner.

- Select “Upgrade control plane version” from the drop-down menu.

- Select the new Kubernetes version (1.23) and click “Upgrade control plane.”

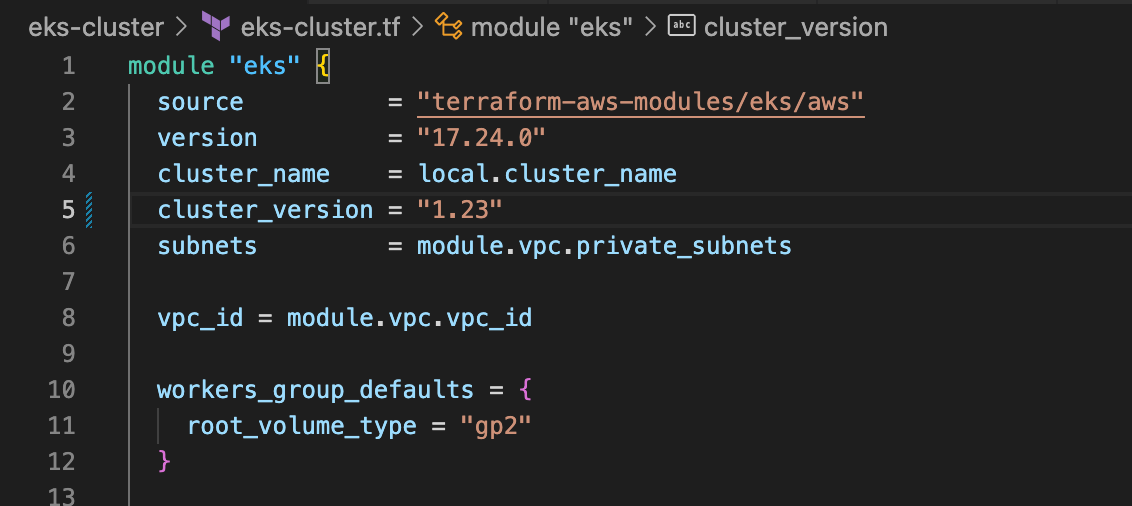
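The same upgrade can also be started from a terminal. The sketch below uses the AWS CLI to read the cluster's current version and request the next minor version, since EKS upgrades one minor version at a time. The cluster name `my-cluster` and the helper function names are placeholders chosen for illustration; it assumes the AWS CLI is installed and authenticated against the right account and region.

```shell
#!/usr/bin/env bash
# Sketch: upgrade the EKS control plane from the command line.
# Assumption: AWS CLI installed and configured; "my-cluster" is a placeholder.

# Compute the next minor version from the current one (1.22 -> 1.23).
next_minor() {
  local major minor
  major="${1%%.*}"
  minor="${1#*.}"
  printf '%s.%s\n' "$major" "$((minor + 1))"
}

# Look up the current version and request an upgrade to the next minor.
upgrade_control_plane() {
  local cluster current target
  cluster="$1"
  current="$(aws eks describe-cluster --name "$cluster" \
    --query 'cluster.version' --output text)"
  target="$(next_minor "$current")"
  echo "Upgrading ${cluster} from ${current} to ${target}"
  aws eks update-cluster-version --name "$cluster" \
    --kubernetes-version "$target"
}

# eksctl alternative:
#   eksctl upgrade cluster --name my-cluster --version 1.23 --approve

# Example (uncomment to run):
# upgrade_control_plane my-cluster
```

The `update-cluster-version` call returns immediately with an update ID; the upgrade itself continues in the background.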

To upgrade the control plane using Terraform:

Note: I changed the cluster version from 1.22 to 1.23 in the Terraform configuration and applied the changes.

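The control plane upgrade typically takes several minutes to complete, and sometimes considerably longer. EKS replaces the API server instances in a rolling fashion, so the Kubernetes API generally stays reachable during the upgrade, although brief interruptions to API calls are possible. Workloads already running on your nodes are not restarted by the control plane upgrade itself.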
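To watch the upgrade finish from a terminal, one option is to poll the cluster until it reports the `ACTIVE` status at the target version. This is a minimal sketch assuming the AWS CLI is configured; the function names, the 30-second interval, and the `my-cluster` name are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: wait until the control plane upgrade has finished.
# Assumption: AWS CLI installed and configured; names are placeholders.

# Succeeds only when the cluster is ACTIVE and already at the target version.
# Arguments: status, current version, target version.
upgrade_done() {
  [ "$1" = "ACTIVE" ] && [ "$2" = "$3" ]
}

# Poll the cluster every 30 seconds until the upgrade is done or has failed.
wait_for_upgrade() {
  local cluster target status version
  cluster="$1"
  target="$2"
  while true; do
    status="$(aws eks describe-cluster --name "$cluster" \
      --query 'cluster.status' --output text)"
    version="$(aws eks describe-cluster --name "$cluster" \
      --query 'cluster.version' --output text)"
    echo "status=${status} version=${version}"
    if upgrade_done "$status" "$version" "$target"; then
      return 0
    fi
    if [ "$status" = "FAILED" ]; then
      return 1
    fi
    sleep 30
  done
}

# Example (uncomment to run):
# wait_for_upgrade my-cluster 1.23
```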

Step 4: Upgrade Your EKS Cluster Nodes

After upgrading the control plane, you need to upgrade your EKS cluster nodes. You can upgrade your nodes using the EKS console, the AWS CLI, the eksctl command-line tool, or the EKS Rolling Update tool. For this article, we will explore using the EKS console and EKS Rolling Update.

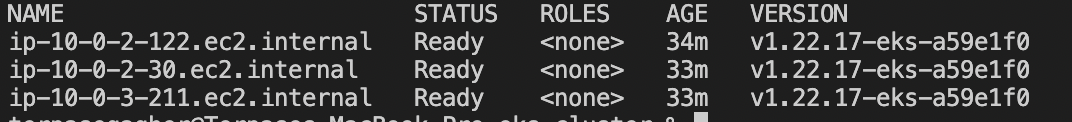

Our control plane has been upgraded to 1.23; however, the worker nodes are still running v1.22.
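The same check can be made with kubectl. The sketch below lists each node with its kubelet version and filters out the nodes that are not yet on the target minor version. It assumes kubectl is pointed at the upgraded cluster; the function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: find worker nodes that are still on the old Kubernetes version.
# Assumption: kubectl is configured for the cluster being upgraded.

# Print "<node name> <kubelet version>" for every node.
list_node_versions() {
  kubectl get nodes --no-headers \
    -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion
}

# Read "<name> <version>" lines on stdin and print the names of nodes whose
# kubelet version does not start with v<target>. (for example v1.23.).
outdated_nodes() {
  awk -v prefix="v$1." 'index($2, prefix) != 1 { print $1 }'
}

# Example (uncomment to run):
# list_node_versions | outdated_nodes 1.23
```

An empty result from `list_node_versions | outdated_nodes 1.23` means every node already runs a 1.23 kubelet.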

EKS rolling update:

EKS Rolling Update is a utility for updating the launch configuration or template of worker nodes in an EKS cluster. It updates worker nodes in a rolling fashion and performs health checks of your EKS cluster to ensure no disruption to service.

Requirements:

- kubectl installed

- KUBECONFIG environment variable set, or config available in ${HOME}/.kube/config per default

- AWS credentials configured
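These requirements can be checked up front with a small pre-flight script. This is a sketch under a few assumptions: it uses the AWS CLI (`aws sts get-caller-identity`) to test credentials, which is not itself one of the tool's listed requirements, and it treats KUBECONFIG as a single path rather than a colon-separated list. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: pre-flight check for the EKS Rolling Update requirements.
# Assumptions: AWS CLI is available for the credentials check, and
# KUBECONFIG (if set) holds a single path, not a colon-separated list.

# Print the kubeconfig path: $KUBECONFIG if set, else the default location.
kubeconfig_path() {
  printf '%s\n' "${KUBECONFIG:-${HOME}/.kube/config}"
}

# Report every unmet requirement on stderr; return non-zero if any is missing.
check_requirements() {
  local missing
  missing=0
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl is not installed" >&2
    missing=1
  fi
  if [ ! -r "$(kubeconfig_path)" ]; then
    echo "no readable kubeconfig at $(kubeconfig_path)" >&2
    missing=1
  fi
  if ! aws sts get-caller-identity >/dev/null 2>&1; then
    echo "AWS credentials are not configured or not valid" >&2
    missing=1
  fi
  return "$missing"
}

# Example (uncomment to run):
# check_requirements && echo "all requirements met"
```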

Installation:

$ pip3 install eks-rolling-update

#!/usr/bin/env bash

# This script does a graceful rolling upgrade of an EKS cluster using https://github.com/hellofresh/eks-rolling-update

# It's needed when worker node groups have changes (AMI version, userdata, etc.)

set -euo pipefail

export K8S_CONTEXT="<cluster context>"

export AWS_DEFAULT_REGION="us-east-1"

export AWS_ACCESS_KEY_ID="<access key>"

export AWS_SECRET_ACCESS_KEY="<secret>"

export K8S_AUTOSCALER_ENABLED="True"

export K8S_AUTOSCALER_NAMESPACE="cluster-autoscaler"

export K8S_AUTOSCALER_DEPLOYMENT="cluster-autoscaler-aws-cluster-autoscaler"

# Forcing drain is required because nodes may have pods not managed by any replication controller and therefore fail normal draining

export EXTRA_DRAIN_ARGS="--force=true"

# Wait 30s between node deletion and taking down the next one in ASG just in case something didn't finish replication in time

export BETWEEN_NODES_WAIT="30"

# Retry operations a bunch of times as things may roll slowly on large clusters

export GLOBAL_MAX_RETRY="30"

# If true, only a query will be run to determine which worker nodes are outdated without running an update operation.

# Set this to "false" to actually roll the nodes.

export DRY_RUN="true"

export ASG_NAMES="philcz-lab-ChaQvs8R-worker-group-120230420130327830200000017"

eks_rolling_update.py --cluster_name philcz-lab-ChaQvs8R


Usage:

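Save the script above as upgrade.sh and replace the context, region, credentials, ASG name, and cluster name with your own values. With DRY_RUN set to true, the tool only reports which nodes are outdated; set it to false and run the script again to roll over your nodes.

$ ./upgrade.sh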

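The node group upgrade typically takes several minutes or longer, depending on the number of nodes. Nodes are drained and replaced in a rolling fashion, so pods on a node being drained are evicted and rescheduled onto other nodes. Workloads that run a single replica, or that cannot tolerate eviction, may experience brief disruptions.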
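Once the rollover finishes, a quick health check helps confirm that nothing was left behind. The sketch below lists nodes that are not in a plain `Ready` state and pods that are neither running nor completed. It assumes kubectl is pointed at the upgraded cluster; the function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: post-rollover health check.
# Assumption: kubectl is configured for the upgraded cluster.

# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column is anything other than plain "Ready"
# (for example NotReady or Ready,SchedulingDisabled).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Show unhealthy nodes and pods that are not Running or Succeeded.
verify_rollover() {
  echo "Nodes that are not Ready:"
  kubectl get nodes --no-headers | not_ready_nodes
  echo "Pods that are not Running or Succeeded:"
  kubectl get pods --all-namespaces \
    --field-selector=status.phase!=Running,status.phase!=Succeeded
}

# Example (uncomment to run):
# verify_rollover
```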
